How to build a staging server with Docker, Jenkins and Traefik

~ Chapter 3 ~ Writing invokable Jenkins build jobs

This guide is largely outdated at this point. You should instead consider installing Jenkins on Kubernetes with Helm.

In this step we are going to configure your first pipeline and make Jenkins aware of a code repository’s activity.

Jenkins will then watch the repository for code changes and run builds automatically. Each build is configured at build time by a Jenkinsfile, which must be located at the root of the connected project.
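For reference, a minimal Jenkinsfile at a project's root might look like the sketch below. The `pollSCM` trigger is only one way of having Jenkins watch the repository; a webhook from your Git host is the usual alternative. The five-minute schedule is an arbitrary assumption. Triggers declared in a Jenkinsfile only take effect after the job has run at least once.

```groovy
// Jenkinsfile at the root of the project repository (minimal sketch)
pipeline {
    agent any

    triggers {
        // Check the repository for new commits roughly every five minutes.
        // 'H' hashes the job name into the schedule to spread the load.
        pollSCM('H/5 * * * *')
    }

    stages {
        stage('Build') {
            steps {
                // Declarative Pipelines check out the repository automatically,
                // so the project's files are already in the workspace here.
                sh 'ls -la'
            }
        }
    }
}
```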

Make building blocks

While you can write all of the code a job requires directly in a single Jenkinsfile, it is more elegant to extract reusable processes into additional Jenkins jobs. You can then update a shared process in one place instead of going through each project.

Docker in Docker

Splitting jobs expresses intent more clearly. It also avoids having to run Docker in Docker: from inside a build container, you cannot easily reach the host server’s Docker instance.

For example, you may be building an application in a Docker container based on php:7.2. After the build, you would not be able to push the result to the registry. That would require calling Docker from within the PHP container, which is itself running inside Docker.

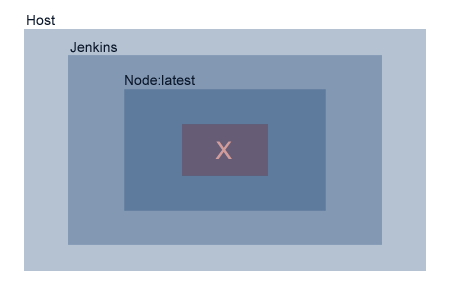

Alternatively, a build may need to run a quick `docker run` as one of its steps. If that build is not running directly in the Jenkins container (agent any), it cannot invoke the Docker binary. Here is a visual example of a build trying to run docker run -it composer:latest composer install from the node:latest image:

Jenkins does not like Docker in Docker


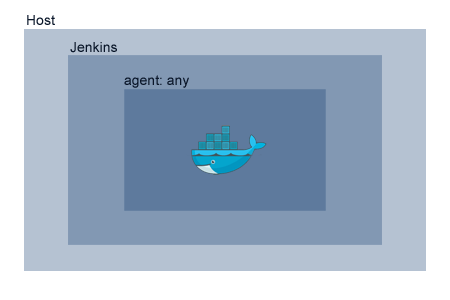
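In Jenkinsfile terms, the failing setup looks roughly like this sketch. It assumes the Docker Pipeline plugin, which provides the `docker` agent. The step runs inside `node:latest`, which has neither a `docker` binary nor access to the host's Docker daemon, so it fails.

```groovy
// Anti-pattern sketch: calling Docker from inside a build container
pipeline {
    // Every stage runs inside a node:latest container
    agent { docker { image 'node:latest' } }

    stages {
        stage('Install PHP dependencies') {
            steps {
                // Fails: the node image ships without the docker CLI, and the
                // container cannot reach the host's Docker daemon anyway.
                // (-it is left out because Jenkins steps do not run with a TTY.)
                sh 'docker run --rm composer:latest composer install'
            }
        }
    }
}
```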

If, on the other hand, you delegate the same Docker operation to another Jenkins job not running as a child container, that job will be allowed access to the Docker socket as it remains at the same level as the Jenkins container.

Staying one level deep works well

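A sketch of the delegated version follows. The application is tested inside a container, and the Docker work is then handed to a separate job that runs directly on the Jenkins node. The job name `docker-image-builder` is hypothetical; use whatever name you give the image job described below. The parameter names match the ones that job reads. Using `env.JOB_NAME` assumes a multibranch pipeline, where the job name has the `<project>/<branch>` shape the image job splits on.

```groovy
pipeline {
    agent any

    stages {
        stage('Test') {
            // Runs inside a throwaway php:7.2 container; no Docker access needed.
            // reuseNode keeps the same workspace as the top-level agent.
            agent {
                docker {
                    image 'php:7.2'
                    reuseNode true
                }
            }
            steps {
                sh 'php -v'
            }
        }

        stage('Image') {
            steps {
                // Runs on the Jenkins node itself. The called job also runs there,
                // one level deep, so it can use the host's Docker socket.
                build job: 'docker-image-builder', parameters: [
                    string(name: 'DEPLOY_JOB_NAME', value: env.JOB_NAME),
                    string(name: 'SOURCE_DIRECTORY', value: env.WORKSPACE)
                ]
            }
        }
    }
}
```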

Missing binaries

A third reason to split your jobs is that images such as the php:7.2 image from the first example often ship with many common GNU/Linux tools (vi, for example) stripped out.

Deployments will need some of these binaries sooner or later. This is why we installed additional utilities in the custom Jenkins image built in the previous chapter.

Create the job to create images

Create another repository dedicated to how Docker images are built and saved. It will contain a single Jenkinsfile. Importing that project as a new Pipeline in Jenkins lets other Pipelines invoke it later, when you build your applications.

The job has to handle being invoked in two ways:

- by a commit to its own repository, from a trigger on that repository;
- as a sub-job of another Jenkins process, called explicitly from another job’s Jenkinsfile.

It tells the two cases apart by whether the DEPLOY_JOB_NAME parameter is set.
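One way to make these two entry points explicit is to declare the parameters the job accepts, as in the sketch below. This is worth considering because Jenkins may discard parameters that a job does not define when another build passes them in.

Keep the following caveats in mind:

- Declared string parameters default to an empty string rather than `null`. The sketch therefore tests them with Groovy truth, which treats both `null` and `''` as false.
- If you adopt this, the `== null` and `!= null` checks in the job below would need the same change. Otherwise, a commit-triggered build would enter the Docker stage.
- Parameters declared in a Jenkinsfile are only registered after the job's first run.

```groovy
// Sketch: the image job with its parameters declared explicitly
pipeline {
    agent any

    parameters {
        // Empty defaults mean "not invoked by another job"
        string(name: 'DEPLOY_JOB_NAME', defaultValue: '', description: 'Calling job as <project>/<branch>')
        string(name: 'SOURCE_DIRECTORY', defaultValue: '', description: 'Workspace of the calling job')
        string(name: 'ADDITIONAL_ARGUMENTS', defaultValue: '', description: 'Extra arguments for docker image build')
    }

    stages {
        stage('Commit on this repository') {
            // Groovy truth: null and '' are both false
            when { expression { !params.DEPLOY_JOB_NAME } }
            steps {
                echo 'Triggered by a commit to this repository, nothing to build'
            }
        }

        stage('Invoked as a sub-job') {
            when { expression { params.DEPLOY_JOB_NAME ? true : false } }
            steps {
                echo "Building the image for ${params.DEPLOY_JOB_NAME}"
            }
        }
    }
}
```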

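The convention I want to enforce is that Docker image configuration may only come from a Dockerfile at the root of the project or from a script located at dockerfiles/build. When both exist, the script takes precedence. The script receives the source directory, project name and branch as arguments, and must tag and push the image itself.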

```groovy
pipeline {
    options {
        buildDiscarder(logRotator(numToKeepStr: '5'))
    }

    post { always { deleteDir() } }

    agent any

    stages {
        // Triggered by a commit to this repository: nothing to build
        stage('Deploy') {
            when { expression { params.DEPLOY_JOB_NAME == null } }
            steps {
                echo "OK"
            }
        }

        // Invoked by another job: build and push that project's image
        stage('Docker') {
            when { expression { params.DEPLOY_JOB_NAME != null } }
            steps {
                script {
                    // DEPLOY_JOB_NAME is expected in the form "<project>/<branch>"
                    def JOB_TAG_SECTIONS = params.DEPLOY_JOB_NAME.split('/')
                    def NAME = JOB_TAG_SECTIONS[0]
                    def BRANCH = JOB_TAG_SECTIONS[1]
                    def REGISTRY_URL = "docker-registry.your_domain/${NAME}:${BRANCH}"
                    def DOCKER_ARGS = "-t ${REGISTRY_URL}"

                    if (params.ADDITIONAL_ARGUMENTS != null) {
                        DOCKER_ARGS = "${DOCKER_ARGS} ${params.ADDITIONAL_ARGUMENTS}"
                    }

                    echo """
                    ==> Configuration
                    --> Names
                    Job name: ${params.DEPLOY_JOB_NAME}
                    Project name: ${NAME}
                    Branch: ${BRANCH}
                    Repository URL: ${REGISTRY_URL}
                    Arguments: ${params.ADDITIONAL_ARGUMENTS}
                    --> Directories:
                    Working directory: ${params.SOURCE_DIRECTORY}
                    """

                    // Only master, develop and feature* branches are automated.
                    // ==~ performs a full match and returns a plain boolean.
                    if (BRANCH ==~ /(master|develop|feature).*/) {
                        if (fileExists("${params.SOURCE_DIRECTORY}/dockerfiles/build")) {
                            // A custom build script takes precedence over a Dockerfile
                            echo "--> Found custom build script @ ${params.SOURCE_DIRECTORY}/dockerfiles/build"
                            echo "--> This script will have to tag and push otherwise nothing gets saved!"
                            sh "chmod +x ${params.SOURCE_DIRECTORY}/dockerfiles/build"
                            sh "${params.SOURCE_DIRECTORY}/dockerfiles/build ${params.SOURCE_DIRECTORY} ${NAME} ${BRANCH}"
                        } else if (fileExists("${params.SOURCE_DIRECTORY}/Dockerfile")) {
                            echo "--> Found project Dockerfile"
                            echo "--> Building image tagged ${REGISTRY_URL}"
                            sh "docker image build ${DOCKER_ARGS} ${params.SOURCE_DIRECTORY}"
                            echo "--> Pushing tag to registry ${REGISTRY_URL}"
                            sh "docker push ${REGISTRY_URL}"

                            // Builds of master are also published as :latest
                            if (BRANCH == "master") {
                                def LATEST_REGISTRY_URL = "docker-registry.your_domain/${NAME}:latest"
                                def LATEST_DOCKER_ARGS = "-t ${LATEST_REGISTRY_URL}"

                                if (params.ADDITIONAL_ARGUMENTS != null) {
                                    LATEST_DOCKER_ARGS = "${LATEST_DOCKER_ARGS} ${params.ADDITIONAL_ARGUMENTS}"
                                }

                                echo "--> Building image tagged ${LATEST_REGISTRY_URL}"
                                sh "docker image build ${LATEST_DOCKER_ARGS} ${params.SOURCE_DIRECTORY}"
                                echo "--> Pushing tag to registry ${LATEST_REGISTRY_URL}"
                                sh "docker push ${LATEST_REGISTRY_URL}"
                            }
                        } else {
                            error("No supported method of making a Docker image was found in this build")
                        }
                    } else {
                        echo "--> ${BRANCH} is an unsupported branch name for automation."
                    }
                }
            }
        }
    }
}
```

Create the job to deploy images

Again, create a new repository that will only handle the deployment logic of Docker images.

Internal conventions also need to be defined at this point. I have settled on docker-compose.<environment>.yml as the naming convention, so projects are expected to define their staging stacks in docker-compose.staging.yml.
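To make the convention concrete, the plain Groovy script below applies the deploy job's two `sed` substitutions to a sample `docker-compose.staging.yml`.

The compose content is an assumption. It uses Traefik 1.x style `traefik.frontend.rule=Host:` labels, which is what the job's `Host:` rewrite implies. The service, image and domain names are hypothetical. The script can be run with the `groovy` command-line tool.

```groovy
// Sample docker-compose.staging.yml (hypothetical names, Traefik 1.x labels)
def compose = '''\
version: "3.4"
services:
  web:
    image: docker-registry.your_domain/myapp:master
    deploy:
      labels:
        - "traefik.frontend.rule=Host:myapp.staging.your_domain"
        - "traefik.port=80"
'''

def branch = 'develop'

// Equivalent of: sed -i -e "s/Host:/Host:${BRANCH}./g"
// Prefix every Traefik Host rule with the branch name as a subdomain.
def rewritten = compose.replace('Host:', "Host:${branch}.")

// Equivalent of: sed -i -e "s/:master/:${BRANCH}/g"
// Point image references at the tag the image job pushed for this branch.
rewritten = rewritten.replace(':master', ":${branch}")

println rewritten
```

With `branch = 'develop'`, the image line ends in `myapp:develop` and the routing rule becomes `Host:develop.myapp.staging.your_domain`. Each branch therefore gets its own subdomain and image tag from the same file.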

```groovy
pipeline {
    options {
        buildDiscarder(logRotator(numToKeepStr: '5'))
    }

    post { always { deleteDir() } }

    agent any

    stages {
        // Triggered by a commit to this repository: nothing to deploy
        stage('Deploy') {
            when { expression { params.DEPLOY_JOB_NAME == null } }
            steps {
                echo "OK"
            }
        }

        // Invoked by another job: deploy that project's stack
        stage('Docker') {
            when { expression { params.DEPLOY_JOB_NAME != null } }
            steps {
                script {
                    // DEPLOY_JOB_NAME is expected in the form "<project>/<branch>"
                    def JOB_TAG_SECTIONS = params.DEPLOY_JOB_NAME.split('/')
                    def NAME = JOB_TAG_SECTIONS[0]
                    def BRANCH = JOB_TAG_SECTIONS[1].replace("/", ".")
                    def REGISTRY_URL = "${params.REPOSITORY}/${NAME}:${BRANCH}"
                    def ENVIRONMENT = params.ENVIRONMENT
                    def COMPOSE_YML = "docker-compose.${ENVIRONMENT}.yml"

                    echo """
                    ==> Configuration
                    --> Names
                    Job name: ${params.DEPLOY_JOB_NAME}
                    Project name: ${NAME}
                    Branch: ${BRANCH}
                    Repository URL: ${REGISTRY_URL}
                    Environment: ${ENVIRONMENT}
                    --> Directories:
                    Working directory: ${params.SOURCE_DIRECTORY}
                    """

                    // Only master, develop and feature* branches are automated
                    if (BRANCH ==~ /(master|develop|feature).*/) {
                        if (fileExists("${params.SOURCE_DIRECTORY}/${COMPOSE_YML}")) {
                            echo "--> Found ${COMPOSE_YML}"
                            echo "--> Customizing domain names for branch ${BRANCH}"
                            // Prefix every Traefik "Host:" rule with the branch as a subdomain
                            sh "sed -i -e \"s/Host:/Host:${BRANCH}./g\" ${params.SOURCE_DIRECTORY}/${COMPOSE_YML}"
                            // Replace the :master image tag with this branch's tag
                            sh "sed -i -e \"s/:master/:${BRANCH}/g\" ${params.SOURCE_DIRECTORY}/${COMPOSE_YML}"
                            echo "--> Deploying"
                            sh "docker stack deploy -c ${params.SOURCE_DIRECTORY}/${COMPOSE_YML} --prune ${NAME}-${BRANCH}"
                        } else {
                            echo "--> Did not find ${COMPOSE_YML}"
                            error("--> Looks like this project is not configured for running as ${ENVIRONMENT}")
                        }
                    } else {
                        echo "--> ${BRANCH} is an unsupported branch name for automation."
                    }
                }
            }
        }
    }
}
```

Add the pipelines to Jenkins

Once you have committed these files to two different repositories, import both as new Jenkins pipelines. Take note of each repository's name. It defines the job name that other jobs must use to invoke these predefined build and deployment processes.
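For example, a project's Jenkinsfile could hand off to the deploy job with a stage like the one below, added after its image has been pushed. The job name `docker-stack-deployer` and the registry host are hypothetical; the job name must match whatever the imported deployment pipeline is called. The parameter names match the ones the deploy job reads. `build` waits for the called job by default, so the caller's workspace still exists while the deploy job reads the compose file from it.

```groovy
// Stage to add to a project's Jenkinsfile, after its image has been pushed
stage('Deploy to staging') {
    steps {
        build job: 'docker-stack-deployer', parameters: [
            // "<project>/<branch>" in a multibranch pipeline
            string(name: 'DEPLOY_JOB_NAME', value: env.JOB_NAME),
            // Where the deploy job looks for docker-compose.staging.yml
            string(name: 'SOURCE_DIRECTORY', value: env.WORKSPACE),
            // Selects docker-compose.${ENVIRONMENT}.yml
            string(name: 'ENVIRONMENT', value: 'staging'),
            // Registry prefix; the deploy job only prints it in its log
            string(name: 'REPOSITORY', value: 'docker-registry.your_domain')
        ]
    }
}
```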

Step completion checklist

- Created a new git project to hold the image creation’s Jenkinsfile
- Created a new git project to hold the deployment’s Jenkinsfile
- Imported both projects as new Jenkins Pipelines
- Received green builds for both

With reusable build and deploy jobs defined, you may now configure your project’s deployment.